
AI is everywhere. Every day, there’s a new article, a new tool, or a new headline about artificial intelligence. But most explanations are either so simple they don’t teach you anything, or so technical you feel like you need a PhD just to get through the first paragraph.

After 20 years in tech, from my early days at WhatsApp to leading engineering teams at Meta, I’ve learned one thing: AI doesn’t have to be complicated. By the end of this article, you’ll understand more about AI than most people, and more importantly, you’ll know how to start using these tools effectively in your own life and work.

Exaltitude newsletter is packed with advice for navigating your engineering career journey successfully. Sign up to stay tuned!

Let’s start with the basics: what is AI?

At its core, AI is about recognizing patterns. It’s about teaching computers to make decisions based on data. Think about your email spam filter. It has studied thousands of examples of emails. When a new one arrives, it looks at patterns and decides: spam or not spam. That’s AI.
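To make the spam filter idea concrete, here is a minimal sketch in Python. It is a toy, not how production spam filters are built: the four training emails, the `train` and `classify` function names, and the simple word-count scoring are all assumptions made for illustration.

```python
from collections import Counter

def train(examples):
    """Count how often each word appears in spam and in normal ("ham") emails."""
    counts = {"spam": Counter(), "ham": Counter()}
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts

def classify(counts, text):
    """Label a new email by which class its words were seen in more often."""
    words = text.lower().split()
    spam_score = sum(counts["spam"][w] for w in words)
    ham_score = sum(counts["ham"][w] for w in words)
    return "spam" if spam_score > ham_score else "not spam"

# Made-up training examples; a real filter studies thousands of emails.
examples = [
    ("win a free prize now", "spam"),
    ("free money claim your prize", "spam"),
    ("meeting notes for monday", "ham"),
    ("lunch on monday with the team", "ham"),
]

model = train(examples)
print(classify(model, "claim your free prize"))   # spam
print(classify(model, "team meeting on monday"))  # not spam
```

The program never receives a rule like "the word free means spam." It only counts patterns in the examples it was given and applies them to the new email.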

When we dig deeper into how AI learns those patterns, we enter the world of machine learning. Here’s a simple analogy: imagine I’m teaching you what a dog looks like, but I only show you golden retrievers. Later, I ask you to recognize a chihuahua. You’d probably miss it, because you’ve only learned one type of dog. Machine learning works the same way. If the training data is too narrow, the results won’t be reliable.
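The golden retriever problem can be sketched in a few lines of Python. This is a deliberately simplified illustration: the weights in kilograms are made-up numbers, and "learning" here is just remembering the smallest and largest weight seen, which is an assumption chosen to keep the example short.

```python
def learn_dog_profile(training_dogs):
    """'Learn' what a dog is as the range of weights (kg) seen in training."""
    weights = [weight for _, weight in training_dogs]
    return min(weights), max(weights)

def looks_like_dog(profile, weight_kg):
    """Accept an animal as a dog only if its weight falls in the learned range."""
    low, high = profile
    return low <= weight_kg <= high

# Illustrative, made-up weights.
narrow = [("golden retriever", 29), ("golden retriever", 32), ("golden retriever", 34)]
broad = narrow + [("chihuahua", 2), ("great dane", 70)]

chihuahua_kg = 2.5
print(looks_like_dog(learn_dog_profile(narrow), chihuahua_kg))  # False
print(looks_like_dog(learn_dog_profile(broad), chihuahua_kg))   # True
```

The code is identical in both runs. Only the training data changed, and that alone decides whether the chihuahua is recognized.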

Then there’s deep learning, which is a special type of machine learning that uses neural networks with many layers. The name “neural” sounds brain-like, but these systems don’t really work like human brains. “Deep” just means stacking many layers so the system can learn from massive amounts of examples.

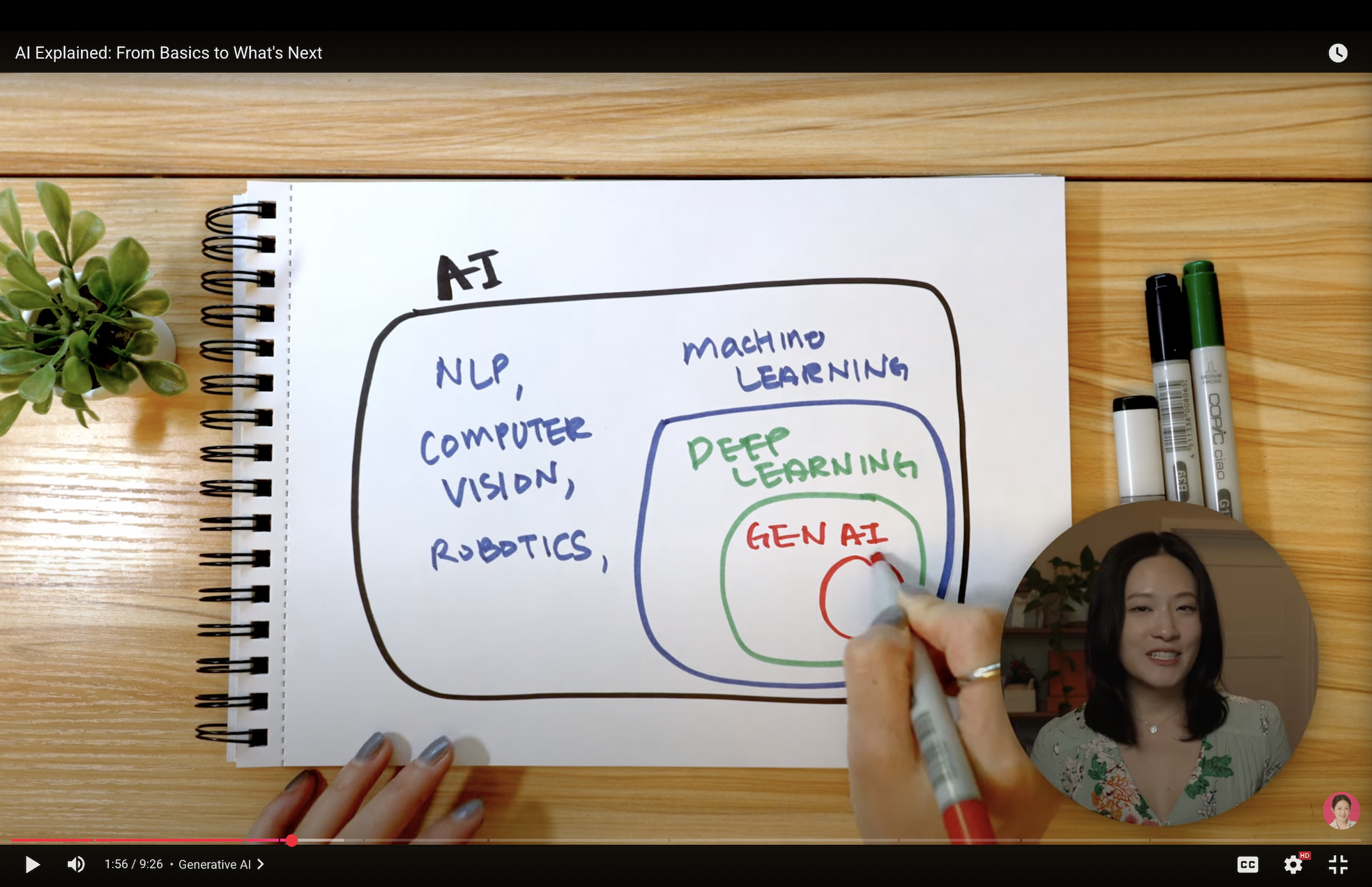

So how do these pieces fit together? Imagine nested circles. The big circle is AI. Inside that circle is machine learning. Inside that is deep learning. And when people talk about AI today, especially generative AI, they’re usually talking about the innermost circle.

Generative AI is different because it can create new content. It can write text, generate images, produce audio, and even create videos. That’s why you see pictures of cats in hats or full essays written in seconds.

The most famous example is the large language model (LLM). Tools like ChatGPT, Google’s Gemini, and Anthropic’s Claude are all LLMs. They process text and generate text in response.

The newest models are multimodal, which means they can handle text, images, audio, and video in one system. But here’s the important part: they only work as well as the instructions you give them. Many people type in a few words, treat AI like a search engine, and then get frustrated with weak results. There’s a much better way.

How to Talk to AI

If you’ve tried using AI and the results felt generic or just “okay,” the problem usually isn’t the tool. It’s how you’re talking to it. The skill of writing those instructions is called prompting.

Most people treat AI like a search engine. They type a few words and hope for the best. But AI isn’t Google. To really take advantage of these tools, you need to learn the art of giving clear, specific instructions. That’s what prompting is all about.

Think of prompting like giving directions to someone who has never been to your city. If you just say, “Get me to the store,” they’ll probably get lost. But if you explain the route step by step, they’ll get you exactly where you need to go.

You can’t just say: “Make me an app.” That’s too vague. Instead, break down what you want. Cover these four areas:

Once you understand prompting, the next step is choosing the right tools. Today, AI coding tools generally fall into two categories:

1. No-Code Tools

These are built for people without a technical background. Platforms like Lovable or Bolt let you describe what you want, and they build it for you, no coding required. They’re perfect for testing an idea quickly, like a weekend app experiment or a simple website for a small business. But they do have limits when you try to build complex, professional-grade applications.

2. Coding Assistant Tools

These are designed for engineers. Tools like Cursor and Claude Code make developers faster by handling repetitive tasks, suggesting improvements, and helping debug errors. They don’t replace engineers, but they free up time to focus on bigger problems.

One tool worth highlighting is Warp, today’s sponsor for this article, redefining how engineers work with AI. With their new release, Warp is the place where the IDE and CLI finally merge into one seamless environment for coding with AI agents.

Warp combines three things into a single workflow:

This matters because it removes friction. Instead of juggling multiple tools, you stay in one environment.

Now, you might think this sounds like Cursor or Claude Code, but here's the difference: Cursor is an AI IDE, and Claude Code is more of a CLI tool. Warp bridges both worlds.

The performance backs it up, too: Warp ranks top 3 on SWE-bench Verified and #1 on Terminal-Bench, which suggests it is strong at both resolving real coding issues and completing tasks in the terminal.

For anyone curious, Warp is currently free to try, and they’re offering a promo for their Pro plan at $1 with the code JEAN here 👉 https://go.warp.dev/jeanlee

Now let’s go one step further. Right now, one of the most exciting frontiers is AI agents.

Unlike a chatbot that only answers one question at a time, an AI agent can work toward a goal by taking multiple steps. It can plan, reason, and act without constant human input.

Think of it as an assistant. You could say: “Book me a trip to Seoul next month.” The agent could then research flights, check your calendar, compare hotels, and handle bookings—all without you spelling out each step.
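Here is a rough sketch of that multi-step behavior in Python. Everything in it is hypothetical: the tool functions return canned strings instead of calling real services, and `plan` returns a fixed list of steps, whereas a real agent would ask a model to choose each next step based on what it has learned so far.

```python
# Hypothetical tools. Each returns a canned string instead of calling a real service.
def check_calendar(state):
    return "free on the 10th through the 15th"

def search_flights(state):
    # Uses the result of an earlier step, the way an agent builds on prior work.
    return f"flight found for dates: {state['check_calendar']}"

def compare_hotels(state):
    return "hotel near the city center"

def book(state):
    return f"booked: {state['search_flights']} + {state['compare_hotels']}"

TOOLS = {
    "check_calendar": check_calendar,
    "search_flights": search_flights,
    "compare_hotels": compare_hotels,
    "book": book,
}

def plan(goal):
    """Stand-in for the model's planning step: turn a goal into ordered steps."""
    return ["check_calendar", "search_flights", "compare_hotels", "book"]

def run_agent(goal):
    """Run each planned step in order, storing results so later steps can use them."""
    state = {"goal": goal}
    for step in plan(goal):
        state[step] = TOOLS[step](state)
    return state

result = run_agent("Book me a trip to Seoul next month.")
print(result["book"])
```

The user states the goal once. The loop then carries results from one step to the next, which is the part a single-turn chatbot does not do on its own.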

This leap is powered by reasoning models, which break problems into smaller steps and solve them one by one. When you see a chatbot pause and say “thinking,” that’s the model working through the steps before giving you an answer.

Two other concepts are shaping how AI works today:

These behind-the-scenes improvements make AI systems practical for real-world use.

Right now, researchers are chasing something called Artificial General Intelligence (AGI). This means AI that can do any thinking task a human can, just as well as a human.

Beyond that is the idea of Artificial Superintelligence (ASI), an AI that is even smarter than humans and can improve itself. Meta even renamed one of its labs Meta Superintelligence Labs to show how seriously it takes the idea.

This is where opinions split. Some people, often called “doomers,” believe AGI could arrive as soon as 2027 and might even pose an existential risk to humanity. Others are skeptical and argue AGI is science fiction that will never actually happen.

And then there is a third perspective. Some say AGI itself is more myth than reality. Karen Hao, author of Empire of AI, compares it to Dune. In the film, Lady Jessica spreads a myth to position her son as a messiah. Hao argues that AGI functions in a similar way, as an industry-created myth that helps big tech control billions in funding while distracting us from the real, immediate challenges AI is creating today.

Whichever view you lean toward, one thing is clear: AI is moving fast, and the conversation around AGI is shaping the future of technology.

If you want a deeper dive into this debate, I have a full video breaking down the future of AI. You can check it out here.


Copyright @Exaltitude